Testing Face Recognition Algorithms on Algorithmic Bias

Sieuwert van Otterloo

Artificial Intelligence

One of the most interesting research projects of ICT Institute was the thesis work by Jesse Tol. Jesse investigated whether face recognition algorithms perform less well on people who wear headgear (scarves, caps, hats). Algorithms should perform equally well on people with and without headgear, because there are religious and cultural minorities who prefer to wear headgear in public, and AI algorithms must not discriminate against such minorities.

Why this research

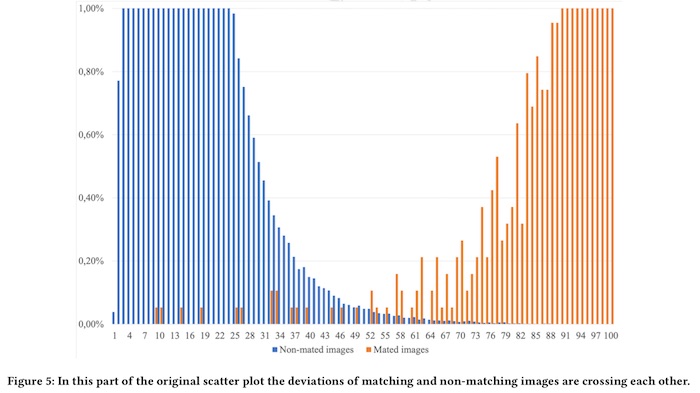

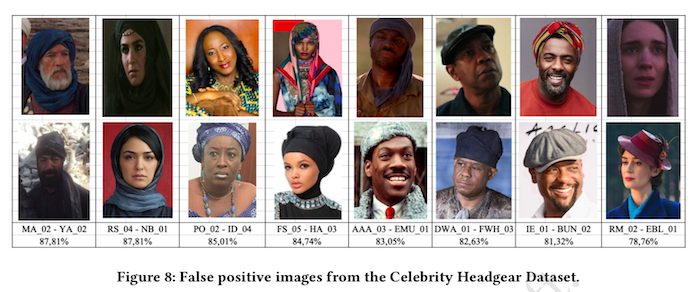

It is well known that artificial intelligence algorithms must be tested thoroughly before use, and several AI-related risks are described in this article. Several facial recognition algorithms have been shown to have an algorithmic bias against women and people of colour. Fortunately, AI researchers are working on this issue, for instance by introducing an AI impact assessment. When Jesse Tol approached us looking for an interesting AI and ethics topic, we decided to extend the existing research into algorithmic bias by looking at a new type of bias, linked to the way people dress.

Jesse looked at several algorithms and known test sets, and created a new data set of his own: the Celebrity Headgear Dataset. It consists of publicly available publicity photos of celebrities (mostly from IMDB), so that no private pictures are used without permission. This dataset was used to evaluate the Amazon Rekognition algorithm.

The image above shows several test images: images of different people that are nevertheless similar and tend to confuse algorithms. The chart shows the confidence scores of both matching (red) and non-matching (blue) image pairs. As you can see, several non-matching (blue) pairs get a higher score than some matching (red) pairs. This means that the tested algorithm is not perfect and is likely to have a small bias against people with headgear.
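The comparison in the chart can also be made quantitative. Below is a minimal Python sketch, assuming each image pair has already been given a similarity score (0 to 100) and labelled both as matching or non-matching and by subgroup. The `ScoredPair` type, the group labels, the example scores and the 80.0 threshold are hypothetical illustrations, not taken from the thesis. For each subgroup it computes the false match rate and false non-match rate at a chosen threshold, and counts how many non-matching pairs score above the weakest matching pair, which is the kind of overlap visible in the chart.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class ScoredPair:
    """One image pair with the algorithm's similarity score (hypothetical structure)."""
    group: str          # subgroup label, e.g. "headgear" or "no_headgear"
    same_person: bool   # True for a matching (red) pair, False for non-matching (blue)
    score: float        # similarity score reported by the algorithm, 0-100


def error_rates(
    pairs: Iterable[ScoredPair], threshold: float
) -> Dict[str, Tuple[Optional[float], Optional[float]]]:
    """Per group, return (false match rate, false non-match rate) at `threshold`.

    A rate is None when the group has no pairs of the corresponding kind.
    """
    # Per group: [false matches, non-matching pairs, false non-matches, matching pairs]
    counts: Dict[str, list] = {}
    for p in pairs:
        c = counts.setdefault(p.group, [0, 0, 0, 0])
        predicted_match = p.score >= threshold
        if p.same_person:
            c[3] += 1
            if not predicted_match:
                c[2] += 1
        else:
            c[1] += 1
            if predicted_match:
                c[0] += 1
    return {
        group: (
            fm / n_non if n_non else None,
            fnm / n_match if n_match else None,
        )
        for group, (fm, n_non, fnm, n_match) in counts.items()
    }


def overlap_counts(pairs: Iterable[ScoredPair]) -> Dict[str, int]:
    """Per group, count non-matching pairs scoring above the lowest matching pair."""
    pairs = list(pairs)
    lowest_match: Dict[str, float] = {}
    for p in pairs:
        if p.same_person:
            lowest_match[p.group] = min(p.score, lowest_match.get(p.group, float("inf")))
    counts = {group: 0 for group in lowest_match}
    for p in pairs:
        if not p.same_person and p.group in lowest_match and p.score > lowest_match[p.group]:
            counts[p.group] += 1
    return counts


if __name__ == "__main__":
    # Made-up scores, for illustration only (not results from the thesis).
    example = [
        ScoredPair("headgear", True, 91.0),
        ScoredPair("headgear", True, 62.0),
        ScoredPair("headgear", False, 85.0),
        ScoredPair("no_headgear", True, 99.0),
        ScoredPair("no_headgear", False, 40.0),
    ]
    print(error_rates(example, threshold=80.0))
    print(overlap_counts(example))
```

A clearly higher error rate for the headgear group than for the other group at the same threshold would be one signal of bias; with small samples, such differences should be interpreted with care.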

Thesis result

Jesse Tol successfully defended his thesis in July 2019 at the University of Amsterdam and obtained the degree of Master of Science in Information Studies. The thesis supervisors were Dr. Frank Nack and Dr. Sieuwert van Otterloo. The full title of the thesis is “Ethical Implications of Face Recognition Tasks in Law Enforcement”. An updated version with a more general focus was also written, titled “Celebrity Headgear Dataset: An Evaluation of Face Recognition Algorithms on Algorithmic Bias”. Jesse also presented the results publicly at a joint AI and ethics seminar with Dr. Stefan Leijnen in Utrecht.

Abstract

In this paper, we investigate how facial recognition algorithms can be tested for algorithmic bias. Specifically, we test for bias against subgroups of people who wear headgear, for instance for religious or cultural reasons. To evaluate such bias, we introduce a new dataset, the Celebrity Headgear Dataset (CHD). The CHD includes 767 images of 144 individuals. As an example of how this dataset can be used to evaluate algorithms for accuracy and bias, the performance of the Amazon Rekognition algorithm is examined. The evaluation provides several examples of incorrectly classified image pairs and thus demonstrates the need to test facial recognition algorithms for algorithmic bias, and the need for manual review steps in any facial recognition application where inaccurate classification could have negative consequences for individuals.
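As an illustration of how a similarity score for one image pair could be obtained from Amazon Rekognition, the sketch below calls its `compare_faces` operation through the boto3 SDK for Python. It is an assumption that the thesis used this exact call; the function name `similarity_score` and the choice to keep the highest reported similarity are hypothetical. The client is passed in as a parameter so the function can be tried with a stand-in object and without AWS credentials. Error handling (for example when no face is detected in the source image) is omitted.

```python
from typing import Any


def similarity_score(client: Any, source_bytes: bytes, target_bytes: bytes) -> float:
    """Return the highest face similarity (0-100) Rekognition reports for an image pair.

    `client` is expected to behave like boto3.client("rekognition").
    Returns 0.0 when no face in the target image is reported as a match.
    """
    response = client.compare_faces(
        SourceImage={"Bytes": source_bytes},
        TargetImage={"Bytes": target_bytes},
        # Without this, CompareFaces only reports faces at or above its default threshold,
        # so low-scoring non-matching pairs would not get a score at all.
        SimilarityThreshold=0.0,
    )
    matches = response.get("FaceMatches", [])
    return max((m["Similarity"] for m in matches), default=0.0)


# Example use (requires boto3 and AWS credentials; file names are hypothetical):
# import boto3
# client = boto3.client("rekognition")
# with open("person_a.jpg", "rb") as a, open("person_b.jpg", "rb") as b:
#     print(similarity_score(client, a.read(), b.read()))
```

Taking the highest similarity is one simple way to handle target photos that contain more than one face; other choices are possible.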

The full thesis is available for download here: Jesse Tol – Ethical Implications of Face Recognition Tasks in Law Enforcement.

Dr. Sieuwert van Otterloo is a court-certified IT expert with interests in agile, security, software research and IT-contracts.