A checklist for auditing AI systems

Sieuwert van Otterloo

Artificial Intelligence

As the use of AI increases, the potential impact of using a flawed AI system increases as well. It is therefore recommended, and often required, to audit AI systems. We talked to several IT auditors and privacy experts to compile a checklist of what should be considered when auditing an AI system.

About the checklist

There are many different AI techniques and many different AI applications, so any audit plan should be based on a risk analysis. Having said that, the following risks are relevant for many AI systems and are thus a good basis for the audit plan. The checklist is based on our own research and experience, but also on three external sources: the GDPR, the EU Ethics guidelines for trustworthy AI and the Guidance on AI and data protection by the British supervisor ICO. The checklist is organised around four steps:

- Development

- Validation

- Deployment

- Long term consequences

Sources and alternatives

Some interesting sources related to this checklist are:

- NOREA Guiding Principles Trustworthy AI Investigations

- The Stanford AI audit challenge

- The ECP AI Impact Assessment (AIIA)

- The UK ICO’s audit guide: A Guide to ICO Audit: Artificial Intelligence (AI) Audits

- The Dutch AP’s report: Rapportage Algoritmerisico’s Nederland

- The news articles mentioned in our overview of AI specific risks.

1 AI development

Data sources

It is important to check what data has been used in the development of an AI system, and whether this data may be used for that purpose. The GDPR restricts the use of personal data to the purpose for which it has been collected. A lot of business data is owned by specific businesses and protected by intellectual property rights. Data collected from the internet is often not fit for purpose. Many AI systems also require data to be annotated with correct answers, and such annotation is often missing.

Secure hosting

AI systems use and produce sensitive data, so they need proper information security. It is often important to check that the cloud/hosting provider is reliable enough, that the system meets OWASP requirements and that interfaces are secured. One should also carefully consider whether sharing data with cloud AI services is acceptable.

Impact assessment

The GDPR requires a data protection impact assessment, or DPIA for privacy enthusiasts, when processing is likely to pose a high risk to the people involved. In the DPIA one should list risks and describe controls, including measures for privacy by design. In an audit we would check whether a DPIA has been completed, and whether the controls and advice from the DPIA have been implemented. One should also check that no more personal data is collected than necessary and that data is deleted once it is no longer useful.
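The last two checks (data minimisation and timely deletion) lend themselves to a simple automated test. The sketch below is only an illustration: the field names, the allowed-field list and the retention period of 365 days are assumptions that would in practice come from the DPIA itself.

```python
from datetime import date, timedelta

# Hypothetical values; in a real audit these would be taken from the DPIA.
ALLOWED_FIELDS = {"customer_id", "postcode", "collected_on"}
RETENTION_DAYS = 365


def excess_fields(record: dict) -> set:
    """Return the fields in a record that the DPIA does not list as necessary."""
    return set(record) - ALLOWED_FIELDS


def past_retention(records: list[dict], today: date) -> list[dict]:
    """Return the records that were collected longer ago than the retention period."""
    cutoff = today - timedelta(days=RETENTION_DAYS)
    return [r for r in records if r["collected_on"] < cutoff]


# Made-up example records
records = [
    {"customer_id": 1, "postcode": "3511", "collected_on": date(2023, 1, 10)},
    {"customer_id": 2, "postcode": "1012", "collected_on": date(2024, 3, 1), "religion": "unknown"},
]
today = date(2024, 6, 1)

overdue = past_retention(records, today)
extra = {r["customer_id"]: excess_fields(r) for r in records if excess_fields(r)}

print("Records that should have been deleted:", [r["customer_id"] for r in overdue])
print("Records with fields not covered by the DPIA:", extra)
```

An auditor would run such a check on a sample of the actual data store and compare the findings with what the DPIA promises.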

2 AI validation

Before an AI system can be used, one must measure the performance of the system on a validation data set. The following are important points to check:

Validation data set

The validation data set should be large enough to give a detailed impression of the performance. It should also contain a wide variety of realistic test cases. The validation data should not be used during the AI training phase.
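Whether validation data has leaked into the training data can be checked mechanically. The following is a minimal sketch, assuming both data sets are available as lists of records; the function names and the example records are made up for illustration. It only finds exact duplicates, so near-duplicates would need a fuzzier comparison.

```python
import hashlib
import json


def record_fingerprint(record: dict) -> str:
    """Return a stable hash of a record, independent of the order of its keys."""
    canonical = json.dumps(record, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_overlap(training: list[dict], validation: list[dict]) -> list[dict]:
    """Return the validation records that also occur in the training data."""
    seen = {record_fingerprint(r) for r in training}
    return [r for r in validation if record_fingerprint(r) in seen]


# Made-up example data
training_set = [
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": 51, "income": 31000, "label": "reject"},
]
validation_set = [
    {"income": 52000, "age": 34, "label": "approve"},  # same record as in training
    {"age": 27, "income": 45000, "label": "approve"},
]

leaked = find_overlap(training_set, validation_set)
print(f"{len(leaked)} of {len(validation_set)} validation records also appear in the training data")
```

Any overlap means the measured performance is likely too optimistic, because the system has already seen the answers.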

Bias / discrimination

One should not just measure overall performance, but also make sure that the algorithm is fair for each subgroup of people. To do this, performance should be measured for all relevant subgroups, defined by attributes such as gender, age, cultural background, nationality and sometimes even skin colour. One should also measure performance for combinations of these attributes (e.g. female children, men over 30, women with an international background). The performance for each subgroup should be good and similar to the overall performance. A good example of detailed validation is in this article on algorithmic bias.
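Measuring performance per subgroup, including combinations of attributes, can be sketched as follows. This is an illustration under assumptions: accuracy is used as the performance measure, the record layout is hypothetical, and the tolerance of 10 percentage points and the minimum group size are arbitrary choices that an auditor would need to justify.

```python
from collections import defaultdict
from itertools import combinations


def subgroup_accuracy(records, attributes):
    """Accuracy for every subgroup defined by one attribute or a combination of attributes.

    Each record is a dict with the attribute values plus 'predicted' and 'actual'.
    Returns {subgroup: (accuracy, number of cases)}.
    """
    totals = defaultdict(lambda: [0, 0])  # subgroup -> [correct, total]
    for size in range(1, len(attributes) + 1):
        for combo in combinations(attributes, size):
            for rec in records:
                key = tuple((attr, rec[attr]) for attr in combo)
                totals[key][0] += rec["predicted"] == rec["actual"]
                totals[key][1] += 1
    return {key: (correct / total, total) for key, (correct, total) in totals.items()}


def flag_unfair_subgroups(records, attributes, tolerance=0.10, min_cases=2):
    """Return overall accuracy and the subgroups that score clearly below it."""
    overall = sum(r["predicted"] == r["actual"] for r in records) / len(records)
    flagged = {}
    for key, (accuracy, count) in subgroup_accuracy(records, attributes).items():
        if count >= min_cases and overall - accuracy > tolerance:
            flagged[key] = accuracy
    return overall, flagged


# Made-up validation results
results = [
    {"gender": "F", "age_group": "adult", "predicted": 1, "actual": 1},
    {"gender": "F", "age_group": "adult", "predicted": 1, "actual": 1},
    {"gender": "F", "age_group": "child", "predicted": 0, "actual": 1},
    {"gender": "F", "age_group": "child", "predicted": 1, "actual": 0},
    {"gender": "M", "age_group": "adult", "predicted": 1, "actual": 1},
    {"gender": "M", "age_group": "adult", "predicted": 0, "actual": 0},
    {"gender": "M", "age_group": "child", "predicted": 1, "actual": 1},
    {"gender": "M", "age_group": "child", "predicted": 0, "actual": 0},
]

overall, flagged = flag_unfair_subgroups(results, ["gender", "age_group"])
print(f"Overall accuracy: {overall:.2f}")
for subgroup, accuracy in flagged.items():
    print(f"Below tolerance: {dict(subgroup)} with accuracy {accuracy:.2f}")
```

In this made-up example the overall accuracy looks acceptable, while the combination of female and child is always predicted wrongly. This is exactly the kind of issue that stays hidden when only overall performance is reported.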

Information leakage

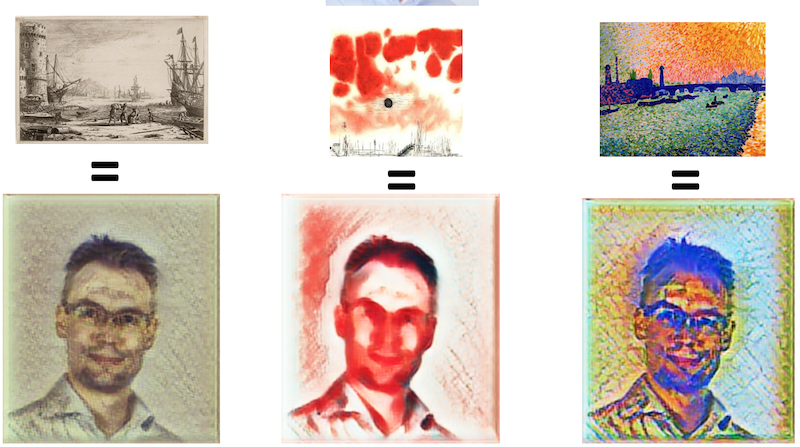

Some AI systems produce text or images: chatbots, text analysis and summary systems, deep fake image generators, and creative software such as the style transfer example shown here (see our summer school in AI). These systems have been trained on large amounts of existing data, and the idea is that the output is a blend of many different inputs, such that no individual input is recognizable. This does not always work: it is possible for AI systems to reproduce part of the training data. Depending on the data, this would be a security breach, a personal data breach or an infringement of copyright.
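For text-producing systems, one simple test is to look for word sequences in the output that occur verbatim in the training data. The sketch below assumes the auditor has access to (a sample of) the training texts; the function names, the example texts and the sequence length of six words are illustrative choices. It will not detect paraphrased or slightly altered reproductions.

```python
def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return all sequences of n consecutive words in the text, lower-cased."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def reproduced_passages(output: str, training_texts: list[str], n: int = 6) -> set[str]:
    """Return the n-word sequences in the output that occur verbatim in the training texts."""
    training_ngrams = set()
    for text in training_texts:
        training_ngrams |= word_ngrams(text, n)
    return {" ".join(gram) for gram in word_ngrams(output, n) & training_ngrams}


# Made-up training texts and system output
training_texts = [
    "Patient John Smith was admitted on 3 March with chest pain",
    "The weather in Utrecht is mild in spring",
]
output = "Our records show that patient John Smith was admitted on 3 March last year"

hits = reproduced_passages(output, training_texts)
for passage in sorted(hits):
    print("Reproduced from training data:", passage)
```

Any hit should be reviewed by a person, since a reproduced passage may be harmless (a common phrase) or may contain personal or copyrighted material.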

Explainability

It is important for all IT systems to have a certain level of explainability: the decisions by the system should be understandable to the company itself, to supervisors and in some cases to the people affected by the decision. Some AI algorithms, such as neural networks, are hard to explain if no additional analysis is done. The auditor should check if outcomes are sufficiently explainable. If not, techniques such as LIME and SHAP are often used to make algorithms more explainable.
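To give an impression of what such additional analysis looks like, the sketch below uses permutation importance. This is a simpler model-agnostic technique than LIME or SHAP, chosen here because it fits in a few lines without external libraries; it is not a replacement for them. The model, the features and the data are made up for illustration.

```python
import random


def permutation_importance(predict, rows, labels, features, repeats=20, seed=0):
    """Average drop in accuracy when the values of one feature are shuffled across rows.

    A large drop suggests the model relies on that feature; no drop suggests it is ignored.
    """
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(row) == label for row, label in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importance = {}
    for feature in features:
        drops = []
        for _ in range(repeats):
            shuffled_values = [row[feature] for row in rows]
            rng.shuffle(shuffled_values)
            shuffled_rows = [{**row, feature: value} for row, value in zip(rows, shuffled_values)]
            drops.append(baseline - accuracy(shuffled_rows))
        importance[feature] = sum(drops) / repeats
    return importance


def black_box_model(applicant):
    """Hypothetical stand-in for a model whose inner workings the auditor cannot see."""
    return "approve" if applicant["income"] >= 40000 else "reject"


# Made-up applicants; the labels are simply the model's own decisions
rows = [{"income": 20000 + 5000 * i, "shoe_size": 36 + i} for i in range(8)]
labels = [black_box_model(row) for row in rows]

importance = permutation_importance(black_box_model, rows, labels, ["income", "shoe_size"])
for feature, score in importance.items():
    print(f"{feature}: average accuracy drop {score:.2f}")
```

The outcome tells the auditor which inputs actually drive the decisions. If a feature that should be irrelevant (or a sensitive attribute) turns out to be important, that is a finding in itself.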

3 Deployment

Transparency

The use of personal data should always be transparent to the people whose personal data is used. You must make it clear how and when data is used, for instance in a privacy statement. Even when no personal data is used, it can be important to communicate clearly to people how AI is used and how they can check outcomes and report issues.

Human in the loop

In many cases, fully automated decision making is not allowed. A real person should supervise the AI system and its decisions. Different deployment models exist: the supervision could cover each decision or a sample of decisions. The manual check can be done before the decision is used or shortly afterwards.
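These deployment models can be combined in one routing rule. The sketch below is an assumption about how this could be set up, not a prescribed design: decisions the model is unsure about are checked before use, and a fixed sample of the remaining decisions is checked afterwards. The confidence threshold and sample rate are hypothetical parameters.

```python
import hashlib


def selected_for_review(decision_id: str, sample_rate: float) -> bool:
    """Deterministically select roughly `sample_rate` of all decisions for human review.

    Hashing the decision id makes the selection reproducible, so an auditor can
    verify afterwards which decisions should have been reviewed.
    """
    digest = hashlib.sha256(decision_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") < sample_rate * 2**64


def route_decision(decision_id: str, confidence: float,
                   sample_rate: float = 0.1, min_confidence: float = 0.8) -> str:
    """Decide which form of human supervision applies to a single AI decision."""
    if confidence < min_confidence:
        return "review_before_use"   # a person checks before the decision takes effect
    if selected_for_review(decision_id, sample_rate):
        return "review_afterwards"   # sampled check shortly after the decision
    return "automatic"


print(route_decision("decision-001", confidence=0.55))
print(route_decision("decision-002", confidence=0.95))
```

Setting `sample_rate` to 1.0 corresponds to supervision of each decision; lower values correspond to supervision of a sample.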

Logging and versioning

Many companies that use AI have an agile development strategy, in which models are often changed, updated, retrained or fine-tuned. Multiple models could be in use in parallel and changes could be made daily. In such a situation it is important to keep track of which model has been used for which decision, so that decisions can be traced back to the actual model and data used. Proper logging is also a requirement in many security standards such as OWASP. There are good practical tools that can help keep track of versions and decisions: Utrecht-based Deeploy (https://www.deeploy.ml) is a dedicated solution for precisely this problem. See also this list for more available tools.
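As an impression of the minimum that should be logged, the sketch below records one entry per decision. It is a generic illustration and not how Deeploy or any other tool works; all field names, model names and version labels are hypothetical. The input is stored as a hash so that the log itself does not contain personal data.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionLogEntry:
    decision_id: str
    timestamp: str
    model_name: str
    model_version: str
    training_data_version: str
    input_hash: str
    outcome: str


def log_decision(log, decision_id, model_name, model_version,
                 training_data_version, inputs, outcome):
    """Append one traceable entry to the log (here simply a list of JSON lines)."""
    canonical_inputs = json.dumps(inputs, sort_keys=True)
    entry = DecisionLogEntry(
        decision_id=decision_id,
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_name=model_name,
        model_version=model_version,
        training_data_version=training_data_version,
        input_hash=hashlib.sha256(canonical_inputs.encode("utf-8")).hexdigest(),
        outcome=outcome,
    )
    log.append(json.dumps(asdict(entry)))
    return entry


def decisions_made_by(log, model_version):
    """Return the ids of all decisions taken by a given model version."""
    entries = (json.loads(line) for line in log)
    return [e["decision_id"] for e in entries if e["model_version"] == model_version]


# Made-up example: two model versions in use in parallel
log = []
log_decision(log, "d-001", "credit-scoring", "2.3.1", "dataset-v7", {"income": 52000}, "approve")
log_decision(log, "d-002", "credit-scoring", "2.4.0", "dataset-v8", {"income": 31000}, "reject")
log_decision(log, "d-003", "credit-scoring", "2.3.1", "dataset-v7", {"income": 45000}, "approve")

print(decisions_made_by(log, "2.3.1"))
```

With such a log, an auditor can ask which decisions were taken by a model version that later turned out to be flawed, and have them re-examined.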

4 Long term consequences

Social impact

The use of AI can have unintended consequences, both positive and negative. It can lead to faster feedback, but also to an inability to handle creative, new solutions. It can make a decision process more consistent, but can also lead to less diverse selections. The quality of decision making can go up in the short term, but you might lose expertise in the long term. One should consider such effects and then implement AI in such a way that there is a net positive effect for all parties involved: customers, employees and society.

Model drift

The performance of AI systems can change over time, for two reasons. Sometimes the algorithm is changed based on input from users. Often this results in improvements, but in some cases algorithms will replicate user errors or even abusive language. In other cases, circumstances change and answers that were correct (buy warm sweaters) are no longer optimal. Either way one should audit the mechanisms for continuous change, the controls on user input and the frequency of quality control.
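Periodic quality control can be partly automated by comparing recent performance with the performance measured during validation. The sketch below is one possible approach, assuming that the correct answer becomes known some time after each prediction; the class name, the window size and the allowed drop are illustrative choices.

```python
from collections import deque


class DriftMonitor:
    """Compare accuracy over the most recent decisions with the validation baseline."""

    def __init__(self, baseline_accuracy, window_size=100, max_drop=0.05):
        self.baseline_accuracy = baseline_accuracy
        self.max_drop = max_drop
        self.window = deque(maxlen=window_size)  # keeps only the latest results

    def record(self, predicted, actual):
        self.window.append(predicted == actual)

    def rolling_accuracy(self):
        """Accuracy over the window, or None while the window is not yet full."""
        if len(self.window) < self.window.maxlen:
            return None
        return sum(self.window) / len(self.window)

    def drift_detected(self):
        accuracy = self.rolling_accuracy()
        return accuracy is not None and self.baseline_accuracy - accuracy > self.max_drop


# Made-up example: a recommender validated at 90% accuracy during winter
monitor = DriftMonitor(baseline_accuracy=0.90, window_size=10, max_drop=0.05)

for _ in range(10):  # winter: customers indeed buy what is recommended
    monitor.record(predicted="warm sweater", actual="warm sweater")
print("Winter:", monitor.rolling_accuracy(), monitor.drift_detected())

for _ in range(10):  # summer: the same recommendation no longer fits
    monitor.record(predicted="warm sweater", actual="t-shirt")
print("Summer:", monitor.rolling_accuracy(), monitor.drift_detected())
```

An auditor would check that a comparable monitor exists, that its threshold is reasonable and that someone acts on the alerts.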

Img: Steve Johnson via Unsplash

Dr. Sieuwert van Otterloo is a court-certified IT expert with interests in agile, security, software research and IT-contracts.